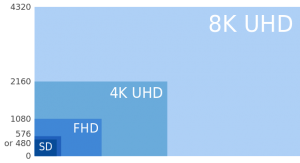

Despite being a bit of a geek, I feel I need to brush up on my 4K basics to avoid seeming lame on such a hot topic. 2013 has already seen UHD/4K bloom at CES; now I need to be ready for IBC. So I had a long chat with Thierry Fautier, who happens to be pretty knowledgeable on this. Here’s the transcript of our talk, in case you too want to seem less lame on the subject.

1. What exactly are 4K & UHD?

4K stands for “4 thousand”, from the screen resolution of 4096 x 2160 pixels, and is the new standard defined by the movie industry. The frame rate is still 24 fps, and the bit depth remains 8 bits.

UHD stands for Ultra High Definition and is supported by the broadcast and TV industries. It differs from 4K in its greater color depth of 10 or 12 bits (which is a huge increase in dynamic color range). The aspect ratio is brought back to the TV’s 16:9, so it sports a 3840 x 2160 resolution.

Frame rate is still a big debate. Some broadcasters argue that 120 fps is needed for football and that color depth should be 12 bits. The ITU specification gives a range of values, but the industry needs to agree rapidly and settle on some figures so that interoperability can be assured. Thierry’s company Harmonic believes that 4K with 10-bit color depth at 60 fps is a "good time-to-market and cost compromise" for the ecosystem.

The following diagram gives a sense of scale for the quantity of data each format involves:

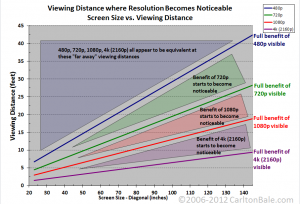
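To put rough numbers on the diagram, here is a minimal sketch in Python that compares pixel counts and uncompressed data rates for the formats discussed above. The 4:2:0 chroma subsampling factor (1.5 samples per pixel), the 1080p / 30 fps / 8-bit HD baseline and all the names are my own assumptions for illustration, not figures from Thierry or from the diagram. The 4K and UHD frame rates and bit depths are the ones quoted in the answer, with UHD taken at its standard 3840-pixel width.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VideoFormat:
    name: str
    width: int
    height: int
    fps: int
    bit_depth: int

    @property
    def pixels(self) -> int:
        """Pixels per frame."""
        return self.width * self.height

    def raw_bps(self, samples_per_pixel: float = 1.5) -> float:
        """Uncompressed bits per second.

        samples_per_pixel=1.5 assumes 4:2:0 chroma subsampling.
        """
        return self.pixels * samples_per_pixel * self.bit_depth * self.fps


# The HD baseline is an assumption; 4K and UHD values follow the interview.
HD = VideoFormat("HD 1080p", 1920, 1080, fps=30, bit_depth=8)
CINEMA_4K = VideoFormat("4K cinema", 4096, 2160, fps=24, bit_depth=8)
UHD = VideoFormat("UHD", 3840, 2160, fps=60, bit_depth=10)

for fmt in (HD, CINEMA_4K, UHD):
    print(
        f"{fmt.name:10s} {fmt.pixels / 1e6:5.2f} Mpixels/frame, "
        f"{fmt.raw_bps() / 1e9:5.2f} Gbit/s raw, "
        f"{fmt.raw_bps() / HD.raw_bps():4.1f}x HD"
    )
```

Under these assumptions UHD carries 4 times the pixels of HD per frame, and about 10 times the raw data once the higher frame rate and bit depth are counted.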

2. Will/should 4K and UHD merge?

No, the cinema workflow will stay separate, even if you'll always get movies on your TV. Broadcasters will not accept such low frame rates for their own productions.

3. What time frame do you see for adoption?

The 2016 Olympic Games will see the beginning of mass adoption, so we need field trials throughout 2015. That in turn means products must be on the market some time in 2014, which implies that we have to sort out the specs in 2013. The football World Cup in Brazil in 2014 will show a spike of interest, but it’s too soon for any real impact. By the end of 2014, however, there will be a range of TV sets, and mass production can probably start in 2015. A recent Consumer Electronics Association study expects just 1M 4K screens in the US in 2015.

4. "HD ready" or 720p preceded "Full HD" or 1080p. Will we see a similar 2K or something here?

It seems that the UHD logo will be properly protected, so consumers should avoid confusion. Services like Netflix will target intermediate formats, and we will probably see an intermediate phase with 1080p50/60 before UHD is launched. Indeed, more and more content is produced at 50/60 fps and workflows can support this. Once UHD STBs that can easily decode 1080p50/60 are deployed, operators will be able to deliver an optimized HD quality that will look much better on a 4K screen than today's 1080i would.

5. Are there any short-term stumbling blocks for CPE?

The current HDMI 1.4 standard limits 4K to an unacceptable 30 fps. HDMI 2.0 is needed for 60 fps, and this will be the true kick-starter for 4K adoption. CES 2014 should see the consecration of HDMI 2.0.

6. Is the compression ratio linear (i.e. will UHD require exactly 8 times the bandwidth of HD)?

No. Today’s HD streams are compressed to 6 Mbps at constant bit rate. By the time it’s ready for mass adoption, UHD at 60 fps with 10-bit color depth should require just under 20 Mbps.

7. Will UHD require HEVC or can it make sense to use H.264?

Without doubt, HEVC is required for UHD to make it economically viable on existing infrastructure.

8. Apple created the marketing term Retina display. What would be the UHD screen size to call it that?

Early testing shows that there is no benefit below a 65-inch screen. But we are framing the problem incorrectly. Try to watch HD on a 65-inch screen: you will see artifacts, so if you want a screen above 65 inches you need UHD!

9. In general, what's the new screen-size vs. optimal viewing distance?

I argue with my colleagues in the UHD community who dream of people sitting 1.5 m away from the screen. In reality I believe people will stay 3 m away, so again the key factor is large screen size. Very large screens will be THE key success factor for UHD adoption. The figure below shows the screen size as a function of the viewing distance for various resolutions.

[Author's note: At its simplest it means that with a 50" screen you need to be 5 feet or 1.5 meters from the screen. For the more standard 10 foot or 3 meter viewing distance, to really feel the 4K effect in your gut, you need an 85" screen.]

10. How will the upgrade from HD to UHD compare to the one we've been through from SD to HD, in terms of:

a) content production / post production: This will be a hard transition for broadcasters this time because there are no connector specs yet. But the cinema industry has been digitally mastering in 4K for a while, so there are plenty of 4K movies ready for release.

b) content acquisition / preparation: This should be fine, as much acquisition is already in 4K.

c) encoding: Except for some early prototypes, 4K encoding is not yet available in real time, mainly due to lack of CPU power. IBC 2014 should have some products, but they might not yet be cost-effective.

d) transport / broadcast: I see no network issue for the satellite and cable guys; indeed, several successful demos have already been done (like the Eutelsat demo still available on 10A).
For telcos, UHD will be dedicated to fiber delivery, and terrestrial will probably need to wait until around 2016-18 for DVB-T to be ready for 4K.

e) decoding: Broadcom chipsets able to decode 4K/10-bit/60 fps will be widely available by 2014, so the first mass-produced STBs will be ready by 2015.

f) content protection: This discussion has only just started. For now Sony’s 4K content uses Marlin DRM, which is the only commercial service currently available.

g) pricing: The very first encoders and STBs will probably carry a premium of a factor of around 3-4 on the price tag vs. HD, just like we saw in the early HD days vs. SD.

h) customer proposition: People aren't “dying for new screens” right now, but 4K could be a driver. The industry must convince consumers that much larger screens, where HD sucks, are a good thing. Otherwise 4K on a small screen isn't appealing enough. It's all about the large screen and being closer to it for a much more immersive sensation - without disturbing the brain the way 3D did (at least with glasses). Content and economic constraints will see 4K start life as a VOD experience, as audiences will be too narrow to justify broadcast. This is where telcos and cable MSOs come into play, and I’m looking forward to talking to some of them about this at IBC 2013.

Disclaimer: I have no ongoing commercial relation with Harmonic; I just had easier access to Thierry than to Envivio, Ateme, Ericsson, Elemental or any of the other reputable vendors in the space. And BTW I’m looking to do a similar debunking piece on HEVC, so ping me if you’d like to be my interviewee this time. BTW this 4K/UHD topic is one of the hot topics I identified for this year's IBC here.
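The screen-size vs. viewing-distance trade-off from question 9 can be approximated with a common rule of thumb: a viewer with normal eyesight resolves detail down to about 1 arcminute. The sketch below is my own back-of-the-envelope version of that rule, not the method behind the figure Thierry showed. The 1-arcminute acuity, the 16:9 panel and the function names are all assumptions, so it will not reproduce the chart exactly.

```python
import math

# Assumed visual acuity: one arcminute, expressed in radians.
ARCMINUTE_RAD = math.radians(1 / 60)
METERS_PER_INCH = 0.0254


def screen_width_in(diagonal_in, aspect_w=16, aspect_h=9):
    """Width in inches of a panel with the given diagonal and aspect ratio."""
    return diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)


def max_resolving_distance_m(diagonal_in, horizontal_pixels):
    """Distance beyond which a single pixel subtends less than 1 arcminute.

    Sitting farther away than this, extra resolution is no longer visible
    under the assumed acuity.
    """
    pitch_in = screen_width_in(diagonal_in) / horizontal_pixels
    return pitch_in / math.tan(ARCMINUTE_RAD) * METERS_PER_INCH


for diagonal in (50, 65, 85):
    hd = max_resolving_distance_m(diagonal, 1920)
    uhd = max_resolving_distance_m(diagonal, 3840)
    print(
        f'{diagonal}" screen: UHD starts to help inside {hd:.2f} m, '
        f"is fully resolved at {uhd:.2f} m"
    )
```

With these assumptions an 85" screen is the first of the three where a 3 m sofa distance falls inside the zone where HD pixels become visible (about 3.4 m), and a 50" screen only shows a UHD benefit somewhere between roughly 1 m and 2 m. That is in the same ballpark as the author's note above.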


Update (November 11)

Kudos to Elemental, who proudly announced the first real-time 4K transmission last week, together with telco K-Opticom, at 20 Mbps; I'm told 12 Mbps could have worked. It was for the Osaka marathon, perhaps not the most exacting of sports for TV, so the 30 frames per second limitation was probably not too much of an issue. Details in their press release here. Harmonic is showing 4K decoded at 60 fps on a true CE device for the first time this week at Inter BEE, but although the target frame rate is there, it'll still be 8-bit color: Harmonic says the rest of the workflow isn't ready for 10-bit yet. It seems the fps debate is closed, as even Elemental people told me 60 is right for sports, but it looks like there's room for a future blog post exploring the 8 vs. 10-bit color issue. Stay posted.
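For a feel of what those bit rates mean, here is a small, hedged calculation of the compression ratio each one implies. The 3840 x 2160 frame size, 8-bit depth and 4:2:0 subsampling for the marathon transmission are my assumptions (the announcement details are not quoted here), and the function names are purely illustrative. The 60 fps / 10-bit case at 20 Mbps is the target mentioned in answer 6.

```python
def raw_bps(width, height, fps, bit_depth, samples_per_pixel=1.5):
    """Uncompressed bits per second (1.5 samples per pixel assumes 4:2:0)."""
    return width * height * samples_per_pixel * bit_depth * fps


def compression_ratio(raw, compressed_mbps):
    """Ratio of the raw bit rate to a compressed rate given in Mbps."""
    return raw / (compressed_mbps * 1e6)


uhd_30_8bit = raw_bps(3840, 2160, fps=30, bit_depth=8)
uhd_60_10bit = raw_bps(3840, 2160, fps=60, bit_depth=10)

cases = [
    ("30 fps, 8-bit at 20 Mbps", uhd_30_8bit, 20),
    ("30 fps, 8-bit at 12 Mbps", uhd_30_8bit, 12),
    ("60 fps, 10-bit at 20 Mbps", uhd_60_10bit, 20),
]
for label, raw, mbps in cases:
    print(f"{label}: about {compression_ratio(raw, mbps):.0f}:1")
```

On these assumptions, hitting 20 Mbps at 60 fps and 10 bits asks the encoder for roughly 2.5 times the compression of the marathon transmission.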